Large language models like ChatGPT have been adopted in software engineering more than in any other field.[1] From the outside, this might seem surprising: why does a chatbot help with programming? These models aren't trained with any particular grammar, so they can learn any language. And programming languages are easy for these models to learn, both because there is a lot of code on the internet to learn from and because code has to spell everything out, leaving little implicit context. So even generic language models are surprisingly good at writing code. Perhaps more importantly, they have a receptive audience, because software engineers like using cool new technology.

You can read all sorts of thinkpieces on how generative AI has changed coding for good, and it's true—this will be a huge change in how software gets made. So you might think that everything we used to know about coding, working in software, and managing engineers is now obsolete.

But programming advice is surprisingly evergreen! One of the most-cited books on working in a software organization is still The Mythical Man-Month, a series of essays by Frederick Brooks originally published in 1975.[2] Even if you're really bullish on generative AI, I don't think you'd say it's a greater revolution than everything that happened in tech from 1975 to 2022. So if this book's advice has held up for that long, it's a good bet it will remain useful in this new era.

Three lessons in particular stand out:

A working program is not enough

Even in the early days of coding, Brooks writes, it was pretty easy for one person to quickly write a program—code that does what you want it to do. If you're making a cool personal project, that's all you need. But if you're a company looking to build a business, he says, that's not enough:

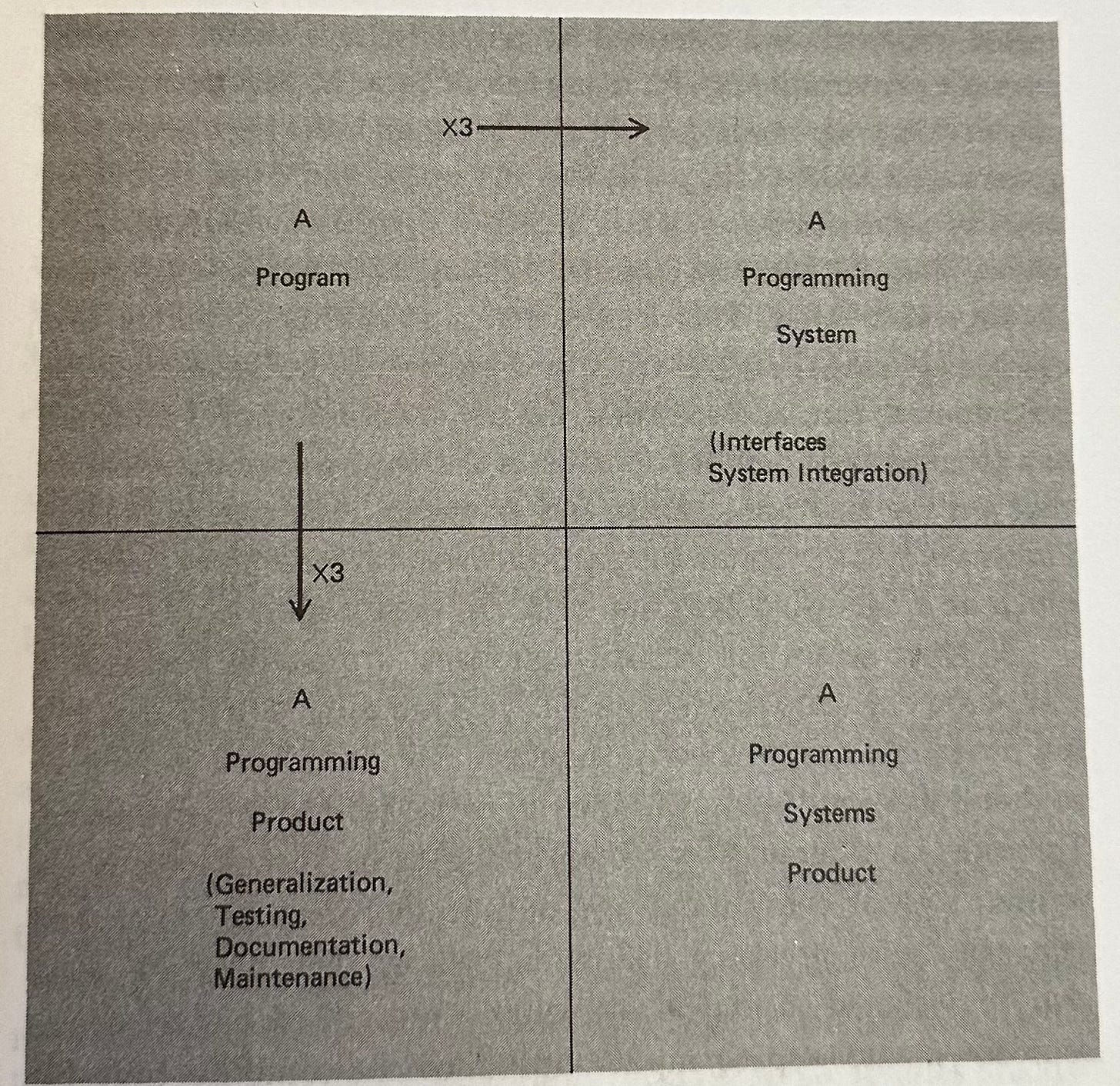

It needs to be a programming system—a program that's able to interact with other programs. That includes designing a clear interface so others can use it, testing how it interacts with other programs, optimizing how fast it runs, and making its inputs and outputs conform to the formats its consumers expect.

It needs to be a programming product—a program that others can understand, troubleshoot, and customize. That includes adding tests so others don't break it, documenting how it works, and generalizing anything that's too specific or unexplained.

Creating either a programming system or a programming product takes at least three times as much work as creating the original program; doing both takes roughly nine times as much work.
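Brooks' figures are rough estimates rather than a formula, but the arithmetic behind "three times" and "nine times" is worth making explicit: the two multipliers compound instead of adding up, so doing both costs 3 × 3 = 9 times as much, not 3 + 3 = 6. The sketch below treats the factors as fixed constants; `estimated_effort`, `SYSTEM_FACTOR`, and `PRODUCT_FACTOR` are hypothetical names introduced for illustration.

```python
# Hypothetical constants based on Brooks' rough estimates: turning a program
# into a programming system, or into a programming product, costs about 3x.
SYSTEM_FACTOR = 3
PRODUCT_FACTOR = 3


def estimated_effort(program_effort: float, system: bool, product: bool) -> float:
    """Scale the effort of a standalone program by Brooks' multipliers.

    The factors multiply, so a programming systems product costs 3 * 3 = 9x.
    """
    effort = program_effort
    if system:
        effort *= SYSTEM_FACTOR
    if product:
        effort *= PRODUCT_FACTOR
    return effort


# A program that took 2 weeks to write:
print(estimated_effort(2, system=False, product=False))  # prints 2
print(estimated_effort(2, system=True, product=False))   # prints 6
print(estimated_effort(2, system=True, product=True))    # prints 18
```

Under these assumptions, a program that took two weeks to write becomes roughly an 18-week programming systems product.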

ChatGPT is amazing at making programs. From simple instructions, it can write surprisingly complex code that mostly works; it can help you debug any remaining issues; and it can even handle a lot of annoying chores, like telling you how to set up an environment in which your code actually runs.

But examples of how ChatGPT can write code almost always stop at the isolated program. Most code written today—and approximately all code you can get paid for writing—is a programming systems product. If you believe Brooks' framework, that means most of the work of programming is still in human hands.

Is that just temporary? AI models can certainly help with system- and product-level work—write automated unit tests for a function, add documentation to a program, generalize it to work for different inputs—but even those tasks arguably need more human oversight, because you can't just run the program and check whether it does what you wanted. For other requirements, such as integration testing or making code easier to change in the future, ChatGPT really can't do much to help you. In some ways it even hurts: to the extent that ChatGPT writes code less thoughtfully or consistently than humans, it creates technical debt that's hard to unwind.

In the very long run, maybe generative AI capabilities will make Brooks' framework totally obsolete. If ChatGPT-7 can write any code imaginable correctly, then the need for maintainable code might become a relic of the past (the same way “memory optimization” used to be really important but can be ignored by many programmers today)—as the cost of rewriting code from scratch approaches zero, you can just do that every time you need to change functionality instead of editing existing code. But language models aren't that capable yet, and organizational processes don't allow for it either; imagine reviewing a pull request that rewrites thousands of lines from scratch just to fix one bug.

"Man-months" are even more mythical today

Brooks' book is most famous for the title essay. He sets up a strawman (in 1970s gendered terminology) of "man-month"-based management, in which tasks are interchangeable between people: if you double the number of programmers working on a project, it can get done twice as quickly. He calls this idea "mythical"; in his experience, adding people to a project doesn't speed it up nearly that much, and may not speed it up at all.

This isn't a universal claim, however. Brooks gives three conditions under which this management approach fails:

Work cannot be perfectly partitioned; for example, the outcome of one module affects how the next one is done. If that's the case, dividing work between more people doesn't help, because those working on later steps still have to wait for the first ones to finish. ("The bearing of a child takes nine months, no matter how many women are assigned.")

People who are just joining a project need time to get up to speed on what's going on. This reduces the amount of time in which they can actually help—doubly so if developers already on the project need to make time to do the training.

Developers need to communicate with each other about how their pieces fit together. The number of potential communication pairs grows roughly with the square of the number of people, so adding more people may even slow down progress. (Hence "Brooks' Law: Adding people to a late software project makes it later.")
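Brooks quantifies the communication problem: if every part of a task must be coordinated with every other part, the effort grows as n(n-1)/2 for n people. A minimal sketch of that count (`communication_pairs` is a hypothetical helper name):

```python
def communication_pairs(n: int) -> int:
    """Number of distinct pairs among n developers: n choose 2 = n(n-1)/2."""
    return n * (n - 1) // 2


for team_size in (2, 4, 8, 16):
    print(team_size, communication_pairs(team_size))
# 2 1
# 4 6
# 8 28
# 16 120
```

Going from 4 to 8 developers doubles the headcount but more than quadruples the number of potential pairs, from 6 to 28.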

In short, tasks are easy to hand off to new people if the goal is clear, little external context is needed, and the output doesn't affect other tasks. But the same attributes also make those tasks easy to hand off to ChatGPT—you can describe what you want in text, fit all the context into a prompt, and isolate it from everything else you're working on. The work that remains will be fuzzier and require more context, making it even harder to add people to a project mid-stream.

Everyone is an "architect" now

A developer on a small team can be much more productive than one on a big team—that’s why members of startups complain that the tech giant that acquired them is too slow, or veterans of those giants wax nostalgic for the early days when they could move faster. Brooks says this is for two reasons.

On a larger team, each developer has to communicate with more people, taking time away from other work.

The more people involved, the less “conceptual integrity” a system has. If one person designs the entire system, they know how everything works, so all the pieces will fit together seamlessly. If multiple people are involved, they may have slightly different visions for how the other pieces should work, so there will be some friction in putting them all together; effective planning and communication can mitigate this somewhat, but not completely.

If small teams are more productive, why don’t startups outcompete the bloated big companies at everything? There are mathematical limits to how much a small team can do—even if a 10-person team is 10 times more productive per person than a 1000-person team, it still can’t produce as much in total—and the requirements of enterprise-scale software often exceed those limits.
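The arithmetic behind that example, writing p for the output of one person on the 1000-person team (p is notation introduced here):

```latex
\begin{aligned}
\text{10-person team:}   &\quad 10 \times (10p) = 100p \\
\text{1000-person team:} &\quad 1000 \times p = 1000p \\
\text{ratio:}            &\quad \frac{100p}{1000p} = \frac{1}{10}
\end{aligned}
```

To merely match the larger team's total output, each member of the 10-person team would need to be 100 times as productive.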

The best you can do, Brooks says, is organize large teams in a way that gets as many of the advantages of small teams as possible. He suggests dividing work between architects who design a complete system at a high level and implementors who put components of that system into practice. Because the structure of the whole system is created by one person, it has more conceptual integrity than one designed by many. And because implementors are working on defined components, most of their communication can be back and forth with the architect, and relatively less with all the other implementors. (Likewise, all discussions about how this system interacts with other systems can be handled by the architect without involving implementors.)[3]
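Much of the payoff from this structure shows up in the number of coordination channels. As an idealized sketch, assume that in the architect model each implementor coordinates only with the architect, whereas in a flat team any two members may need to coordinate; `mesh_channels` and `hub_channels` are hypothetical names. Real teams fall somewhere in between, since implementors still talk to each other somewhat.

```python
def mesh_channels(people: int) -> int:
    """Flat team: any two members may need to coordinate (people choose 2)."""
    return people * (people - 1) // 2


def hub_channels(implementors: int) -> int:
    """Idealized architect model: each implementor talks only to the architect."""
    return implementors


for implementors in (5, 10, 20):
    team = implementors + 1  # the implementors plus one architect
    print(implementors, mesh_channels(team), hub_channels(implementors))
# 5 15 5
# 10 55 10
# 20 210 20
```

With 20 implementors, the flat team has 210 potential pairs while the idealized hub has 20, which is why channelling design questions through one architect keeps the coordination load roughly linear in team size.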

However, modern tech companies don’t really do this—some senior programmers have an “architect” title, but they also do implementation, and others also contribute to system design.[4] That may change in the future, with the “implementor” roles filled by robots. That’s because generative AI changes the comparative advantage calculus:

Even as they get more powerful, large language models will make mistakes when implementing systems, and they’ll need oversight; on balance, they’ll still probably be worse than a talented human programmer is today. But they’ll be reasonably close, and they’ll be much faster and cheaper.

On the other hand, they’ll still struggle to design systems. That’s partly because the problem isn’t as well structured (systems can’t be captured in language the same way code can, and there aren’t as many publicly available examples to train on), but also because design requires context that often isn’t written down at all: what data is expected to come through that system, what changes are being made concurrently to related systems, and which nuances of how pieces fit together in practice can’t really be captured by a diagram.

So all software engineers of the future should spend more of their time on “architecture” problems—the ones that ChatGPT can’t answer effectively—and delegate implementation to the robots.

If a lot of today’s software engineering work can be delegated to AI, does that mean developers will lose their jobs? Not necessarily; economics teaches us that as the cost of something (software production) declines, the amount of it demanded will go up. We’ll have even more software in the future, and more people designing it at higher levels.

It does, however, mean that the need for good judgement will be higher. If you implement code badly, someone will probably notice quickly—the program won’t work, or a reviewer will catch edge cases you missed. But if you design a system badly, the problem might not be noticed for a while; maybe it will break as your userbase scales, or maybe it isn’t serving the use case you thought it was. So the skillset of developers will likely change: depth of knowledge in particular languages and speed of execution will matter less; business sense and handling ambiguity will matter more.

[1] Thanks to OpenAI for resolving its mess without imploding so I don't have to change my ChatGPT clickbait headlines.

[2] There are two ways that advice can remain evergreen: 1) it highlights fundamental truths about the world that remain unchanged; or 2) it highlights truths that would have been temporary and makes them fundamental by influencing how important people think about the world and getting passed down to future generations. I'm not sure which is the case here, but it's impressive either way.

[3] Brooks also argues that the implementation work should be rigidly divided even further, in working groups modeled after surgical teams: one “surgeon” is in charge of writing most of the code; a “copilot” is a sounding board and helper but without final say; a “tester” validates the system; a “clerk” keeps track of all the outcomes; a “toolsmith” provides the right code editing environment and other tools; and a few other people handle administrative work and communications. In the last 50 years, most of these functions have been automated (logging, testing, secretarial work) or standardized enough to share within the company or outsource (tooling, administrative work), allowing programmers to operate as teams of one.

[4] Why? Optimistic explanations are that technologies like higher-level languages and code editors made it easier to make system design coherent across larger teams, or that the speed of iteration became more important than system coherence anyway. A pessimistic explanation is that people didn’t like being confined to an implementation role and managers didn’t have the power to make them suck it up.

Perhaps supporting the optimistic explanation, the same link notes that true “architects” are more common in old industries like finance and cars, which operate on slower cycles that are more like 1970s-era software projects.